Skepticism About DeepMind's "Grandmaster-Level" Chess Without Search

I'm not even sure that I couldn't manage a draw against it

The paper, published last week

First, addressing misconceptions that could come from the title or from the paper's framing as relevant to LLM scaling:

The model didn't learn from observing many move traces from high-level games. Instead, the authors trained a 270M-parameter model to map (board state, legal move) pairs to Stockfish 16's predicted win probability after playing the move. This can be described as imitating the play of an oracle that reflects Stockfish's ability at 50 milliseconds per move. Then they evaluated a system that, in any given position, plays the move the model rates highest. The system resulted in some quirks that required workarounds during play.

The board states were encoded in FEN notation, which records only the current position and not which earlier positions have occurred. This matters in a small number of situations, because a player can claim an immediate draw when the same position occurs three times.

The model is a classifier, so it doesn't actually predict Stockfish's win probability; instead it predicts which of 128 bins that probability falls into. So in a very dominant position (e.g. king and rook versus a lone king), many moves land in the same top bin, the model can't tell which of them actually make progress toward mate, and it can alternate between winning lines without ever delivering checkmate. Some of these draws-from-winning-positions were averted by letting Stockfish finish the game if the top five moves according to both the model and Stockfish had a win probability above 99%.
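A minimal sketch of the pipeline described in these paragraphs, under stated assumptions: 128 equal-width bins over [0, 1], a move picker that collapses each predicted bin distribution to an expected value using bin midpoints, and one reading of the Stockfish hand-off rule (check the model's top five moves against both the model's and Stockfish's values). This is not the authors' code; all function names and the toy one-hot predictions are hypothetical. It shows how clearly different winning evaluations collapse into the same top bin and leave the argmax with nothing to choose between.

```python
from typing import Dict, List

NUM_BINS = 128  # win-probability bins, per the description above


def to_bin(win_prob: float, num_bins: int = NUM_BINS) -> int:
    """Training label: map a win probability in [0, 1] to an equal-width bin (assumed layout)."""
    return min(int(win_prob * num_bins), num_bins - 1)


def expected_win_prob(bin_probs: List[float]) -> float:
    """Collapse a predicted distribution over bins into one value using bin midpoints."""
    n = len(bin_probs)
    return sum(p * (i + 0.5) / n for i, p in enumerate(bin_probs))


def pick_move(predictions: Dict[str, List[float]]) -> str:
    """No search: play the legal move with the highest predicted value."""
    return max(predictions, key=lambda m: expected_win_prob(predictions[m]))


def should_hand_off(model_values: Dict[str, float],
                    stockfish_values: Dict[str, float],
                    top_k: int = 5,
                    threshold: float = 0.99) -> bool:
    """One reading of the workaround: let Stockfish finish when the model's top_k
    moves all exceed `threshold` according to both the model and Stockfish."""
    top_moves = sorted(model_values, key=model_values.get, reverse=True)[:top_k]
    return all(model_values[m] > threshold and stockfish_values[m] > threshold
               for m in top_moves)


def one_hot(index: int, num_bins: int = NUM_BINS) -> List[float]:
    """Toy stand-in for a model that is fully confident in a single bin."""
    probs = [0.0] * num_bins
    probs[index] = 1.0
    return probs


if __name__ == "__main__":
    # Hypothetical Stockfish evaluations for three winning moves: all share bin 127.
    print([to_bin(p) for p in (0.999, 0.996, 0.993)])  # [127, 127, 127]
    # Assuming the model predicts that same bin for each move, the argmax
    # has no reason to prefer the line that actually mates.
    tied = {"a1a8": one_hot(127), "a1a2": one_hot(127), "e1e2": one_hot(127)}
    print(pick_move(tied))  # a1a8, only because max() returns the first tied key
```

Taking the expected value over bins is one plausible decoding; the paper's exact procedure may differ, but any decoding that sees identical bin predictions will face the same tie.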

Some comments have been critical on these grounds, like Yoav Goldberg's "Grand-master Level Chess without Search: Modeling Choices and their Implications" (h/t Max Nadeau). But these criticisms don't seem that severe to me. Representing the input as a sequence of board states rather than the final board state would also have been a weird choice, since the specific moves that led to a given board state basically don't affect the best move at all. It's true that the model didn't learn about draws by repetition, and maybe Yoav's stronger claim that it would be very difficult to learn that rule observationally is also true, especially since such draws have to be claimed by a player and don't happen automatically (even on Lichess). But it's possible to play GM-level chess without knowing this rule, especially if your opponents aren't exploiting your ignorance.
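Counting repetitions requires the game history, which is exactly what a single FEN string lacks. A minimal sketch, assuming the history is available as a list of FEN strings: two positions are treated as identical when the first four FEN fields (piece placement, side to move, castling rights, en passant square) match, and the move counters are dropped. This is a simplification, since FIDE's rule only counts the en passant square when a capture is actually possible. The function names are hypothetical.

```python
from collections import Counter
from typing import List


def position_key(fen: str) -> str:
    """Keep the FEN fields that matter for repetition; drop the halfmove
    clock and fullmove number, which change even when the position doesn't."""
    return " ".join(fen.split()[:4])


def can_claim_threefold(history: List[str]) -> bool:
    """True if the latest position in the history has occurred at least three times."""
    if not history:
        return False
    counts = Counter(position_key(fen) for fen in history)
    return counts[position_key(history[-1])] >= 3


if __name__ == "__main__":
    start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - {} {}"
    # Knights shuffle out and back twice, returning to the start position.
    history = [start.format(0, 1), start.format(4, 3), start.format(8, 5)]
    print(can_claim_threefold(history))  # True
```

A model that receives only `history[-1]` has nothing to count, which is why it cannot learn this rule from its input alone.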

But I'm skeptical that the model actually plays GM-level chess in the normal sense. You need to reach a FIDE Elo of 2500 (roughly 2570 USCF) to become a grandmaster. I would guess Lichess ratings are 200-300 points higher than skill-matched FIDE ratings, but this becomes a bit unclear to me after a FIDE rating of ~2200 or so, where I could imagine a rating explosion because the chess server will mostly match you with players who are worse than you (at the extreme, Magnus Carlsen's peak Lichess rating is 500 points higher than his peak FIDE rating).

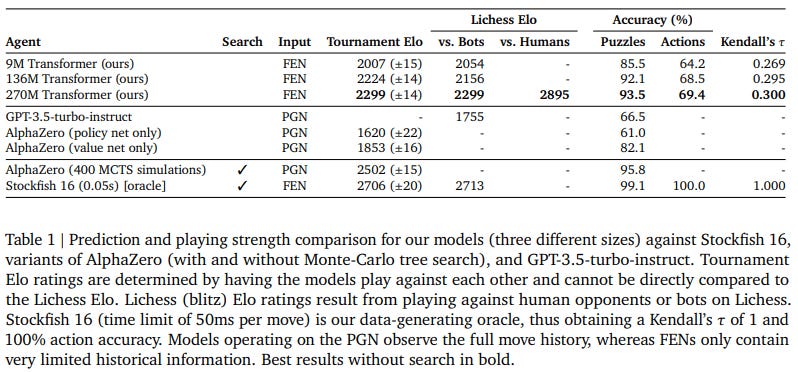

Take the main table of the paper:

They don't describe their evaluation methodology in detail, but it looks like they just created a Lichess bot. Lichess bots won't pair with human players who use the matchmaking system on the home page; you have to navigate to Community → Online Players → Online Bots and then challenge them to a game. So there are three results here:

Lichess Elo (2299): This is the column labeled "vs. Bots", but 4.5% of its games were actually against humans. Most human players on Lichess don't challenge bots, so most of the games were against other bots.

Tournament Elo: This was a tournament among a set of AlphaZero-based models, smaller versions of their model, and Stockfish. The Elo scale was anchored so that the main 270M-parameter model had a score of 2299 (its Lichess rating), so the absolute numbers carry no independent information; the best interpretation of the results is that the model plays roughly 400 Elo points worse than Stockfish 16.

Lichess Elo vs. Humans (2895): This is based on ~150 games where their model only accepted challenges from human players. The players who challenge strong bots are probably very unlike the normal player distribution. In fact, I don't have a good model at all of why someone would take a lot of interest in challenging random bots.
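To put the tournament's roughly 400-point gap to Stockfish 16 in perspective, the textbook Elo expected-score formula gives the weaker side about 0.09 points per game. This is a back-of-the-envelope sketch, not a reconstruction of the paper's numbers; BayesElo fits ratings with a somewhat different model, so treat the result as approximate.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Textbook Elo expected score (win = 1, draw = 0.5) for A against B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # A 400-point gap: the model at 2299 against an opponent rated 2699.
    print(round(expected_score(2299, 2699), 3))  # 0.091
```

In other words, a 400-point gap corresponds to scoring about one point in eleven games.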

Even a Lichess rating of 2900 might only barely correspond to grandmaster-level play. A Lichess rating of 2300 could correspond to 2000-level (FIDE) play.
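The rating conversions above are simple arithmetic under the guessed offset. A minimal sketch, assuming a flat 200 to 300 point gap between Lichess and FIDE ratings (the guess from earlier, which may well widen above roughly FIDE 2200); the function name is hypothetical.

```python
from typing import Tuple


def lichess_to_fide(lichess: int, min_gap: int = 200, max_gap: int = 300) -> Tuple[int, int]:
    """Rough FIDE range for a Lichess rating, assuming a flat 200-300 point gap."""
    return lichess - max_gap, lichess - min_gap


if __name__ == "__main__":
    for rating in (2299, 2895):
        print(rating, lichess_to_fide(rating))
    # 2299 (1999, 2099)
    # 2895 (2595, 2695)
```

If the gap does widen at the top end, the 2895 estimate would shift down toward the 2500 grandmaster threshold.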

They mention two other reasons for the discrepancy:

Humans tend to resign when they're crushed, while bots sometimes manage to draw or win if they luck into the indecisiveness problem described above, where the model shuffles between winning moves without delivering mate.

"Based on preliminary (but thorough) anecdotal analysis by a [FIDE ~2150 player], our models make the occasional tactical mistake which may be penalized qualitatively differently (and more severely) by other bots compared to humans."

They apparently recruited a bunch of National Master-level players (FIDE ~2150) to make "qualitative assessments" of some of the model's games; they gave comments like "it feels more enjoyable than playing a normal engine" and that it was "as if you are not just hopelessly crushed." Hilariously, the authors didn't benchmark the model against these players . . .

Random other notes:

Leela Chess Zero has been using a transformer-based architecture for some time, but it's not mentioned at all in the paper

Their tournament used something called BayesElo, while Lichess uses Glicko-2; this probably doesn't matter

I was also interested in that headline, since chess can be framed much like an LLM task (using the position as the context vector, then using a transformer to predict the next move). After reading your skepticism, here are my thoughts:

1. Why does it matter if it was trained on Stockfish's outputs rather than high-level games? LLMs tend to imitate human text, but I've seen papers on training them on multiplication (which a calculator can do). Wouldn't Stockfish be analogous to that, since it far exceeds humans at chess?

I agree it would be quite interesting to see a transformer that predicts which moves a human would make (https://maiachess.com/). Perhaps this paper is not that groundbreaking, since LeelaChess is rated 2700 on Lichess using depth 1 (https://lichess.org/@/LazyBot).

2. I agree with the part about FEN. One way to mitigate the indecisiveness in winning positions would be to include bins for mate in <3, <6, <12, and <24 moves. This would find mate in most simple endgames (perhaps not bishop + knight).
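The mate-bin suggestion can be sketched as an extended label set. This assumes the 128 probability bins are kept and four extra "forced mate" classes are appended for mate in fewer than 3, 6, 12, and 24 moves (the thresholds from the comment); the class layout, the ranking function, and the move names are hypothetical. Because the fastest mate gets the lowest extra index, the move picker needs an explicit ranking rather than comparing raw class indices.

```python
from typing import Optional

NUM_WIN_BINS = 128
MATE_LIMITS = (3, 6, 12, 24)  # "mate in <3, <6, <12, <24", fastest first


def label(win_prob: float, mate_in: Optional[int] = None) -> int:
    """Class index: 0..127 are win-probability bins, 128..131 are forced-mate classes."""
    if mate_in is not None and mate_in > 0:
        for i, limit in enumerate(MATE_LIMITS):
            if mate_in < limit:
                return NUM_WIN_BINS + i
    # Slower mates (24+ moves) and ordinary positions fall back to probability bins.
    return min(int(win_prob * NUM_WIN_BINS), NUM_WIN_BINS - 1)


def preference(cls: int) -> int:
    """Sort key: any forced mate beats every probability bin; faster mates rank higher."""
    if cls >= NUM_WIN_BINS:
        return NUM_WIN_BINS + len(MATE_LIMITS) - (cls - NUM_WIN_BINS)
    return cls


if __name__ == "__main__":
    # Hypothetical labels for three winning moves in a K+R vs K ending.
    moves = {"a1a8": label(0.999, 1), "e1e2": label(0.999, 14), "a1a2": label(0.999)}
    print(max(moves, key=lambda m: preference(moves[m])))  # a1a8
```

With these extra classes, moves that would all share bin 127 under plain binning now separate by mate distance.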

I think the most exciting possible use of LLM research on chess would be a way to store chess positions (and games) in a vector database. Chess2Vec already exists, but, for instance, an expanded version of Lichess's opening explorer could be used to find similar positions and games in the middlegame and endgame. A chess win-probability model that accounts for rating would also be interesting, perhaps using NN on similar positions, MCTS, or Markov chains if possible.
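As a rough illustration of the vector-database idea, positions can be turned into fixed-length vectors and compared by cosine similarity. This sketch uses a hand-built 12 × 64 one-hot encoding of FEN piece placement and a brute-force search; it is not how Chess2Vec works, and a real system would more likely use learned embeddings and an approximate nearest-neighbor index. All names are hypothetical.

```python
import math
from typing import List

PIECES = "PNBRQKpnbrqk"  # white then black piece letters as they appear in FEN


def encode(fen: str) -> List[float]:
    """12 x 64 one-hot vector of piece placement; square 0 is a8, square 63 is h1."""
    vec = [0.0] * (len(PIECES) * 64)
    square = 0
    for ch in fen.split()[0]:
        if ch == "/":
            continue
        if ch.isdigit():
            square += int(ch)  # run of empty squares
        else:
            vec[PIECES.index(ch) * 64 + square] = 1.0
            square += 1
    return vec


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(query_fen: str, corpus: List[str], k: int = 3) -> List[str]:
    """Brute-force k most similar positions by piece placement."""
    q = encode(query_fen)
    return sorted(corpus, key=lambda fen: cosine(q, encode(fen)), reverse=True)[:k]


if __name__ == "__main__":
    start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
    after_e4 = "rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq e3 0 1"
    rook_ending = "8/8/8/4k3/8/8/8/R3K3 w - - 0 1"
    print(nearest(after_e4, [rook_ending, start], k=1) == [start])  # True
```

A raw piece-placement encoding only captures surface similarity; two positions that differ by one pawn can be strategically very different, which is one reason learned embeddings would likely be preferred.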

Thank you for your interest in our paper, Arjun!

We actually beat a GM 6-0 in blitz.

Moreover, our bot played against humans of all levels, from beginners all the way to NMs, IMs, and GMs. So far, our bot has lost only 2 games (out of 365).

Does that alleviate your concerns?